Running Helm in vRO

Running Helm directly from Aria Automation Orchestrator, as a resource action


Introduction

Aria Automation provides native integration with Tanzu Kubernetes clusters. The following resource actions are available out of the box:

- Apply YAML

- Delete

- Scale Worker Nodes

- Update VM Class

- Upgrade Kubernetes Version

We can also download a Kubeconfig file, which grants full admin access to the provisioned cluster.

In this post we'll create a new resource action capable of running Helm and installing charts.

Running CLI applications from Orchestrator

The usual way of running commands from Orchestrator is to log in to an external host (Linux via SSH or Windows via PowerShell remoting). This is easy to set up, but it adds a component that needs to be maintained.

Orchestrator can also run commands on the Orchestrator server's host operating system, but this is disabled by default for security reasons.

Orchestrator 8 introduced new languages for writing scripts: Node.js, Python and PowerShell. Scripts written in these languages run in containers. Each language has its own set of default libraries (Python, PowerShell), but the list can be extended with a zip bundle.

Whatever is put into this zip file is extracted to the /run/vco-polyglot/function directory. We add the helm binary to the zip file to get it into the container.

Running a Linux binary on the container

It is important to note that the container is Linux-based, so we need to download the Linux build of the Helm CLI. My first choice of language was PowerShell, as it is designed to run commands (PowerShell is a shell). However, when I checked memory consumption, Python turned out to be less memory-hungry. Python can invoke shell commands too, so we can use it as a "shell".

The files extracted to /run/vco-polyglot/function are read-only and not executable, so we cannot run them directly. We would need to set the executable flag, but that is not possible in this read-only directory. So first we copy the binary to the root filesystem and make it executable:

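```python
import os

def handler(context, inputs):
    # The bundled files are read-only and not executable, so copy helm
    # to /usr/bin with mode 755 (rwxr-xr-x)
    os.system("install -m 755 helm /usr/bin")
    # Run helm without arguments to check that it works
    os.system("helm")
    return "done"
```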
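The install command above does the copy and the chmod in one step. If you prefer not to depend on the coreutils install binary being present in the container image, the same step can be written in plain Python. A minimal sketch; install_executable is a hypothetical helper, not part of any Orchestrator API:

```python
import os
import shutil
import stat

def install_executable(src: str, dest_dir: str) -> str:
    """Copy src into dest_dir and mark the copy executable (mode 755).

    Hypothetical helper with the same intent as `install -m 755 src dest_dir`.
    """
    dest = os.path.join(dest_dir, os.path.basename(src))
    # Copy the file contents only, not the read-only mode bits of the source
    shutil.copyfile(src, dest)
    # rwxr-xr-x: owner can write, everyone can read and execute
    os.chmod(dest, stat.S_IRWXU | stat.S_IRGRP | stat.S_IXGRP | stat.S_IROTH | stat.S_IXOTH)
    return dest
```

Inside the handler it would be called as install_executable("helm", "/usr/bin") before invoking helm, assuming (as the original code does) that the working directory is the extracted bundle.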

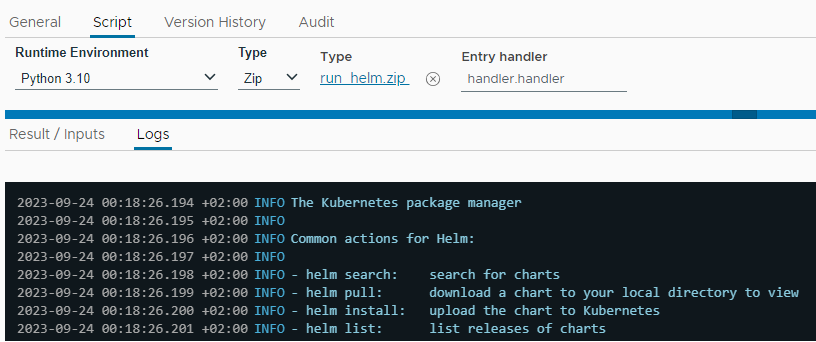

Let's save this file as handler.py, copy the helm binary next to it, and package the two files into a zip file named run_helm.zip. Then we create a new action, set the Runtime environment to Python and the Type to Zip, and attach the zip file. If we run the action, we can see the Helm CLI output.
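The packaging step can also be scripted. A minimal sketch using Python's standard zipfile module; it assumes handler.py and the Linux helm binary sit in the current directory, and build_action_zip and write_action_zip are hypothetical helper names. Both files are stored at the root of the archive, next to each other:

```python
import io
import zipfile
from pathlib import Path

def build_action_zip(files: dict[str, bytes]) -> bytes:
    """Return zip archive bytes with every entry stored at the archive root."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for name, data in files.items():
            archive.writestr(name, data)
    return buffer.getvalue()

def write_action_zip(out_path: str = "run_helm.zip",
                     sources: tuple[str, ...] = ("handler.py", "helm")) -> None:
    """Read each source file from disk and write the bundle to out_path."""
    files = {Path(src).name: Path(src).read_bytes() for src in sources}
    Path(out_path).write_bytes(build_action_zip(files))
```

Calling write_action_zip() in the directory holding both files produces run_helm.zip, ready to attach to the action.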

Invoke Helm action

The following Python code can run multiple Helm commands within a single action run; only the arguments following helm need to be supplied. It prints each command's standard output and standard error and captures its return code, which allows some basic error handling: the action stops at the first failing command. To install charts, Helm needs a kubeconfig file, which is provided as an action input and saved to the container filesystem. The output of the last command is returned as the action result.

```python
import shlex
import subprocess

def handler(context, inputs):
    # Copy the bundled helm binary onto the root filesystem and make it executable
    run_command("install -m 755 helm /usr/bin")
    run_command("mkdir -m 750 /root/.kube")

    # Save the kubeconfig input to the root user's default kubeconfig location
    print("kubeconfig length: " + str(len(inputs["kubeconfig"])), flush=True)
    with open("/root/.kube/config", "w") as kubeconfig:
        kubeconfig.write(inputs["kubeconfig"])
    run_command("chmod 600 /root/.kube/config")

    # Run each helm command in order and stop at the first failure
    stdout = ""
    for param in inputs["parameters"]:
        result = run_command("helm " + param)
        stdout = result.stdout.decode("utf-8").rstrip()
        if result.returncode != 0:
            raise Exception("Command 'helm " + param + "' failed: " + result.stderr.decode("utf-8").rstrip())

    # Log the container's memory usage (cgroup v1 counter)
    run_command("cat /sys/fs/cgroup/memory/memory.usage_in_bytes")
    # The output of the last helm command becomes the action result
    return stdout


def run_command(command):
    """Run a command without a shell, log its return code and output, and return the result."""
    print("# RUN COMMAND # " + command, flush=True)
    result = subprocess.run(shlex.split(command), capture_output=True)
    print("# RETURN CODE # " + str(result.returncode), flush=True)
    if result.stdout != b"":
        print("# STDOUT #\n" + result.stdout.decode("utf-8").rstrip(), flush=True)
    if result.stderr != b"":
        print("# STDERR #\n" + result.stderr.decode("utf-8").rstrip(), flush=True)
    return result
```
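Because run_command splits the command line with shlex.split and passes the resulting list to subprocess.run without a shell, quoting is honored the way a POSIX shell would honor it, but shell features such as pipes, redirection or variable expansion are not interpreted. The hypothetical helper below applies the same tokenization to a parameter string, so you can check what helm will actually receive (the release and chart names in the comments are made up):

```python
import shlex

def helm_argv(param: str) -> list[str]:
    """Return the argument list run_command() would pass to subprocess.run."""
    return shlex.split("helm " + param)

# Quotes group words into a single argument:
#   helm_argv("install web util/hello --set 'greeting=hi there'")
#   -> ['helm', 'install', 'web', 'util/hello', '--set', 'greeting=hi there']
# A pipe is just another literal argument, not a shell pipeline:
#   helm_argv("list | grep hello") -> ['helm', 'list', '|', 'grep', 'hello']
```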

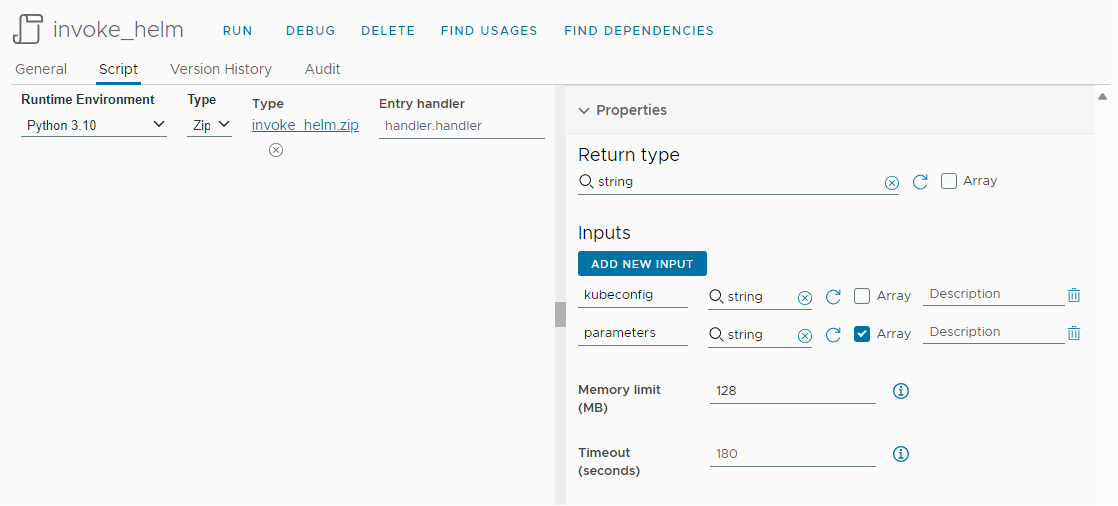

The inputs are defined, and the memory limit is increased to avoid out-of-memory (OOM) errors:

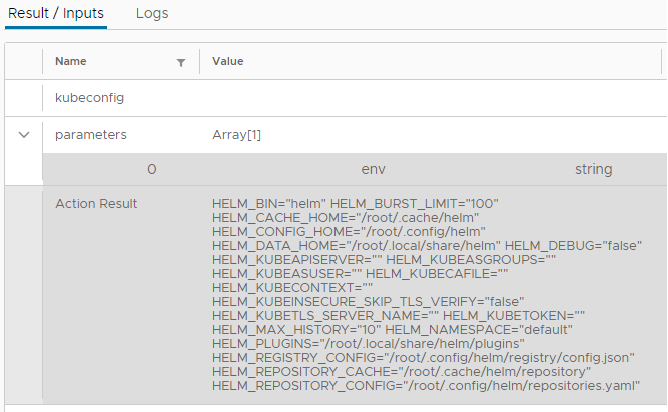
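The action logs memory usage by reading /sys/fs/cgroup/memory/memory.usage_in_bytes, which is the cgroup v1 location. If you want to measure usage from inside the handler on a system that might use cgroup v2 instead, a small reader can try both layouts. A minimal sketch: memory.current is the standard cgroup v2 counter, but which cgroup version your Orchestrator appliance uses is an assumption to verify:

```python
from pathlib import Path
from typing import Optional

# Candidate counters relative to the cgroup mount point: v1 first, then v2
CANDIDATES = ("memory/memory.usage_in_bytes", "memory.current")

def read_memory_usage(cgroup_root: str = "/sys/fs/cgroup") -> Optional[int]:
    """Return the current memory usage in bytes, or None if no counter is readable."""
    for relative in CANDIDATES:
        path = Path(cgroup_root) / relative
        try:
            return int(path.read_text().strip())
        except (OSError, ValueError):
            continue  # missing or unparsable file: try the next layout
    return None

def format_mib(num_bytes: int) -> str:
    """Format a byte count as mebibytes with one decimal place."""
    return f"{num_bytes / (1024 * 1024):.1f} MiB"
```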

Let's run it with the single parameter env, which prints Helm's environment variables inside the container:

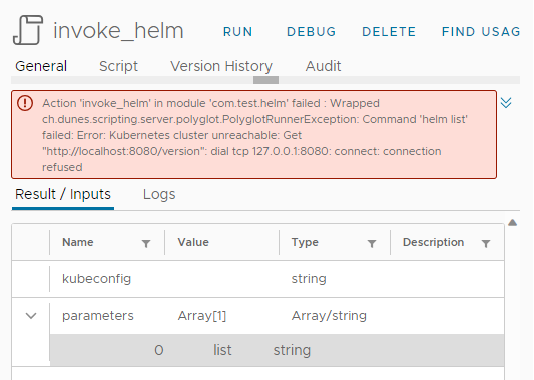

Error handling: running helm list without a kubeconfig fails, and the Helm error message is thrown:

Installing Helm charts on a Tanzu cluster from Aria Automation, as a resource (day 2) action

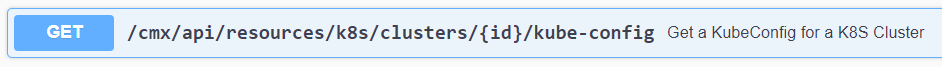

In order to communicate with the target cluster we need a Kubeconfig file. Luckily, the file is not only available to the user in the Service Broker GUI; we can also download it from Aria Automation via the CMX service API.

This code will get the link to the config file:

```javascript
var resourceProperties = System.getContext().getParameter("__metadata_resourceProperties");
kubeconfigLink = resourceProperties.__kubeconfigLink;
```

The next snippet fetches the config file via the REST API (using the vRA plugin) on behalf of the running user, who has access to this resource because they are running the day 2 action on it:

```javascript
// REST client authenticated as the user running the workflow
var vraHost = VraHostManager.createHostForCurrentUser();
var restHost = vraHost.createRestClient();

var request = restHost.createRequest("GET", kubeconfigLink);
var response = restHost.execute(request);
var statusCode = response.statusCode;
var responseContent = response.contentAsString;

// 200 OK, 201 Created, 204 No Content
if (statusCode != 200 && statusCode != 201 && statusCode != 204) throw responseContent;
else kubeconfig = responseContent;
```

Then we prepare the Helm command parameters for the action: add a local repo, then install a chart under the given release name into the required namespace (release, chart and namespace are all input variables):

```javascript
parameters = [
    "repo add util https://util.dev.local/charts/ --insecure-skip-tls-verify",
    "install " + release + " " + chart + " --insecure-skip-tls-verify -n " + namespace
];
```
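Since the Invoke Helm action tokenizes each parameter string with shlex.split, an input value containing a space or a quote character would be split into several helm arguments. The workflow itself is JavaScript, but the idea is easy to show in Python: quote every user-supplied value with shlex.quote before concatenating. A sketch; build_install_parameters is a hypothetical helper, and the repo URL is the one used in this post:

```python
import shlex

REPO_URL = "https://util.dev.local/charts/"

def build_install_parameters(release: str, chart: str, namespace: str) -> list[str]:
    """Build the helm parameter strings, quoting each user-supplied value."""
    return [
        "repo add util " + REPO_URL + " --insecure-skip-tls-verify",
        "install " + shlex.quote(release) + " " + shlex.quote(chart)
        + " --insecure-skip-tls-verify -n " + shlex.quote(namespace),
    ]
```

Plain values such as day2-hello pass through unchanged. A value like "my app" stays a single argument, so Helm should reject it as an invalid release name instead of misreading the second word as the chart.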

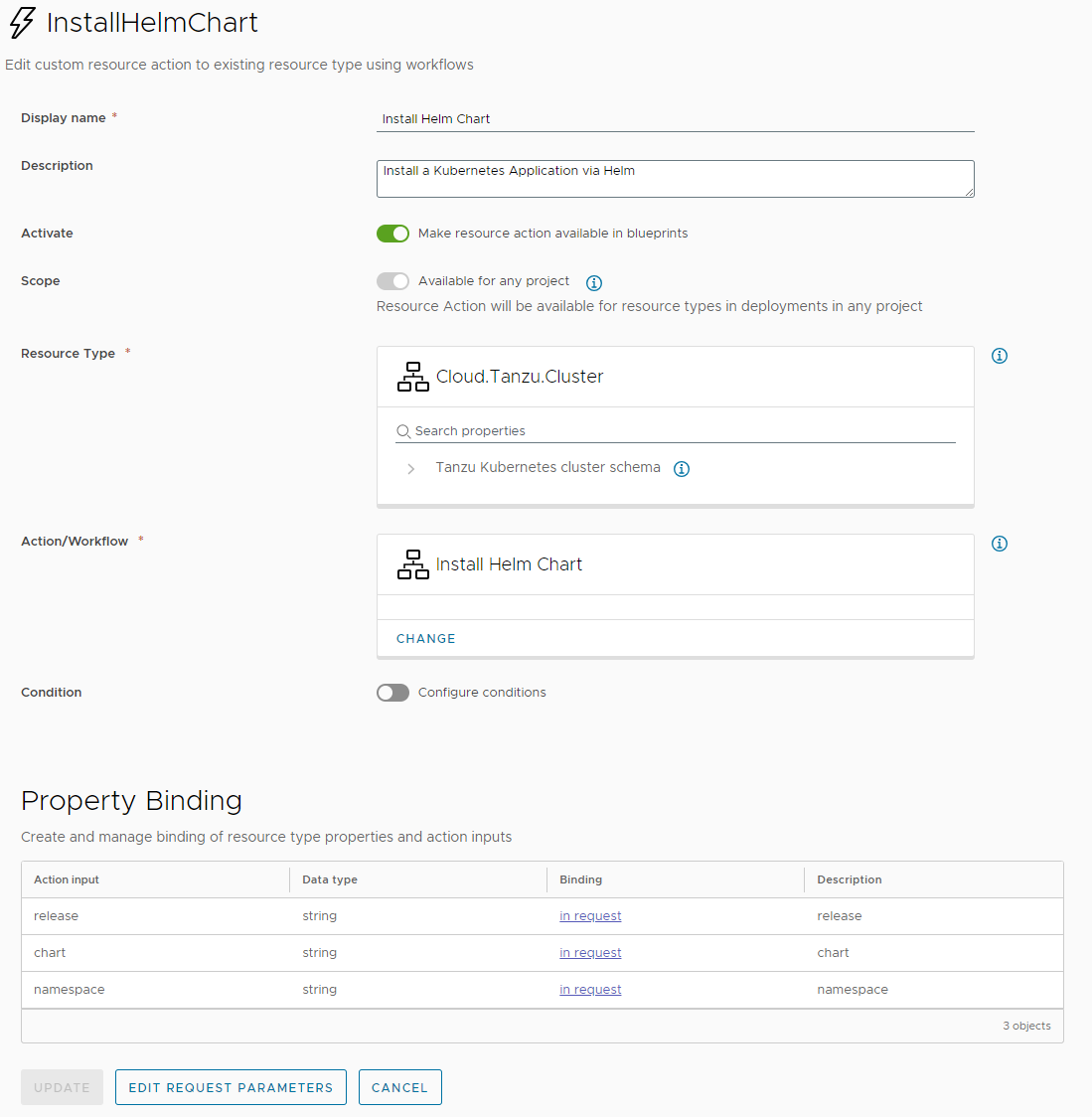

Let's create a resource action with the workflow:

Helper action: choose an available chart

We hard-coded our util repo, so why not offer the user the charts available in it? We reuse our Invoke Helm action. This time we do not need a Kubeconfig for listing, so this is simple:

```javascript
var searchResult = System.getModule("com.test.helm").invoke_helm("no kubeconfig required", [
    "repo add util https://util.dev.local/charts/ --insecure-skip-tls-verify",
    "search repo util -o json"
]);
var charts = JSON.parse(searchResult);
return charts.map(function (chart) { return chart.name });
```
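The helper action relies on helm search repo -o json printing a JSON array of objects that carry a name field (alongside version, app_version and description). The same parsing in Python, run against a hypothetical sample output for a repo containing a single hello chart:

```python
import json

def chart_names(search_output: str) -> list[str]:
    """Extract chart names (repo/chart) from `helm search repo -o json` output."""
    return [chart["name"] for chart in json.loads(search_output)]

# Hypothetical sample output, for illustration only
SAMPLE = '[{"name":"util/hello","version":"0.1.0","app_version":"1.0","description":"Hello World"}]'
```

Returning the full repo/chart name means the selected value can be passed to helm install as-is.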

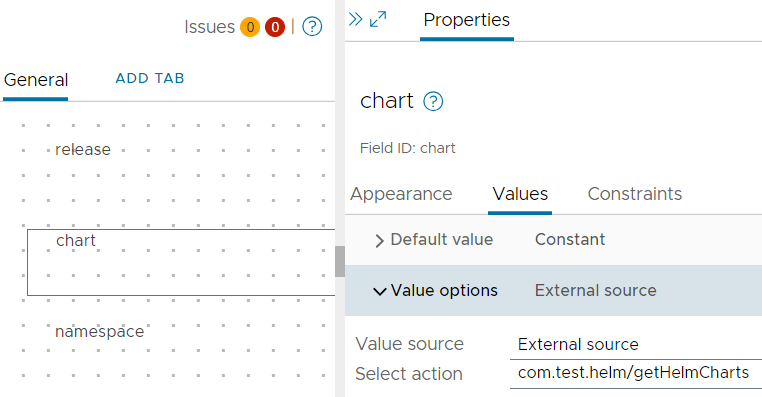

Make the chart input a dropdown, and set this action to provide the list of values:

Preparing the cluster

Before we can install a chart, we need policies that allow the cluster administrator user (the user in the Kubeconfig) to create Deployments outside the kube-system namespace because, by default:

Using Pod Security Policies with Tanzu Kubernetes Clusters

Effect of Default PodSecurityPolicy on Tanzu Kubernetes Clusters

The following behavior is enforced for any Tanzu Kubernetes cluster:

- A cluster administrator can create privileged pods directly in any namespace by using his or her user account.

- A cluster administrator can create Deployments, StatefulSets, and DaemonSet (each of which creates privileged pods) in the kube-system namespace.

Without creating a ClusterRoleBinding, we get errors like:

Error creating: pods "day2-hello-7dd74674bd-gxzwv" is forbidden: PodSecurityPolicy: unable to admit pod: []

So let's create a ClusterRole and a ClusterRoleBinding (taken from Robert Guske's post) with the following YAML file:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: psp:privileged
rules:
- apiGroups: ['policy']
  resources: ['podsecuritypolicies']
  verbs: ['use']
  resourceNames:
  - vmware-system-privileged
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: all:psp:privileged
roleRef:
  kind: ClusterRole
  name: psp:privileged
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: Group
  name: system:serviceaccounts
  apiGroup: rbac.authorization.k8s.io
```

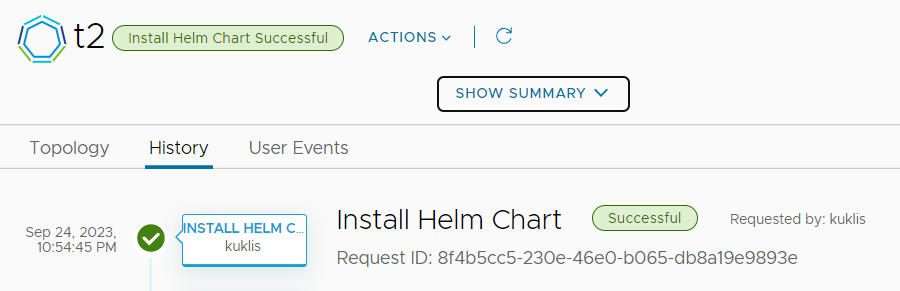

Running the day 2 action

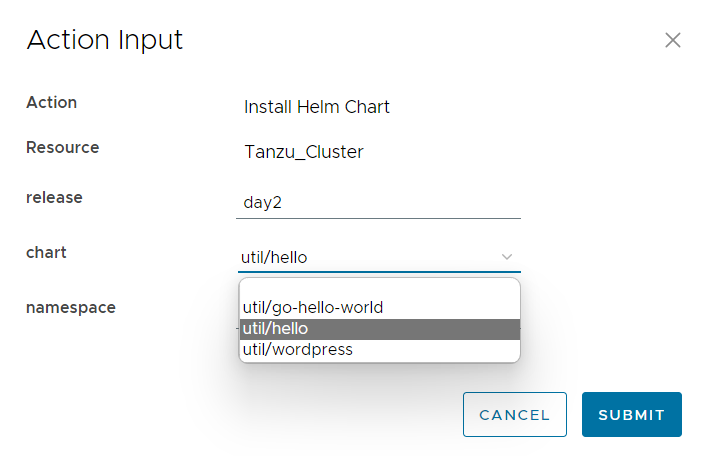

This is what the input form looks like:

The run in Service Broker:

Let's test the hello application.

```console
$ export KUBECONFIG=~/Downloads/t2
$ kubectl --namespace api get svc
NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE
day2-hello   ClusterIP   10.96.48.130   <none>        8080/TCP   27m
$ kubectl --namespace api port-forward service/day2-hello 8080:8080 &>/dev/null &
$ curl http://127.0.0.1:8080/
Hello, World!
2023-09-24 21:21:39.177979545 +0000 UTC
```

The application was installed successfully by Helm.

Final thoughts

Orchestrator containers running polyglot functions are not designed for the purpose we used them for, but it was a fun experiment. I hope you find the sample useful and that it gives you new ideas on how to use this method.

You can download the com.test.helm package containing the workflows and actions from GitHub: https://github.com/kuklis/vro8-packages