VCF 9 Operations Orchestrator - bootstrap fix

How to fix the Operations Orchestrator 9 first boot

Operations Orchestrator 9 issue

While setting up an external Orchestrator 9.0 for VCF Automation, I ran into an old problem that prevents the appliance from properly starting the kube-apiserver endpoint. Let's troubleshoot the issue and look at a possible solution.

The first error message

I tried to set up authentication against the Automation appliance and got the following error message.

    root@vro [ ~ ]# vracli vro authentication set -p tm -f -k -u admin -hn https://vra.vcf.lab --tenant automation
    error: error validating "/etc/vmware-prelude/vracli-command-templates/rbac.yaml": error validating data: failed to download openapi: Get "http://localhost:8080/openapi/v2?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused; if you choose to ignore these errors, turn validation off with --validate=false
    2025-05-30 13:15:03,324 [ERROR] Could not create a service account for executing vro commands.
    NoneType: None
    Could not create a service account for executing vro commands.

When I tried to check the Kubernetes cluster, I got the same connection error again.

    root@vro [ ~ ]# kubectl get po -n prelude
    E0530 13:15:24.705385 9597 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"http://localhost:8080/api?timeout=32s\": dial tcp 127.0.0.1:8080: connect: connection refused"
    E0530 13:15:24.707548 9597 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"http://localhost:8080/api?timeout=32s\": dial tcp 127.0.0.1:8080: connect: connection refused"
    E0530 13:15:24.709500 9597 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"http://localhost:8080/api?timeout=32s\": dial tcp 127.0.0.1:8080: connect: connection refused"
    E0530 13:15:24.711222 9597 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"http://localhost:8080/api?timeout=32s\": dial tcp 127.0.0.1:8080: connect: connection refused"
    E0530 13:15:24.712975 9597 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"http://localhost:8080/api?timeout=32s\": dial tcp 127.0.0.1:8080: connect: connection refused"
    The connection to the server localhost:8080 was refused - did you specify the right host or port?

First I tried to fix the cluster by running /opt/scripts/deploy.sh, but it could not complete.

Checking the kubelet and starting it manually did not help either.

    root@vro [ /etc ]# systemctl status kubelet
    ○ kubelet.service - kubelet: The Kubernetes Node Agent
         Loaded: loaded (/etc/systemd/system/kubelet.service; disabled; preset: enabled)
        Drop-In: /usr/lib/systemd/system/kubelet.service.d
                 └─20-10-k8s-config.conf
         Active: inactive (dead)
           Docs: http://kubernetes.io/docs/
    root@vro [ ~ ]# systemctl start kubelet
    Job for kubelet.service failed because the control process exited with error code.
    See "systemctl status kubelet.service" and "journalctl -xeu kubelet.service" for details.

Checking the logs, I found a suspicious entry:

    root@vro [ ~ ]# journalctl
    ...
    May 30 12:56:42 vro.vcf.lab sh[850]: Couldn't reach NTP server 172.16.0.2: [Errno 101] Network is unreachable
    May 30 12:56:42 vro.vcf.lab sh[850]: Couldn't reach NTP server 172.16.0.3: [Errno 101] Network is unreachable
    May 30 12:56:42 vro.vcf.lab sh[850]: No reachable NTP server found
    May 30 12:56:42 vro.vcf.lab sh[850]: 2025-05-30 12:56:42Z Script /etc/bootstrap/firstboot.d/00-apply-ntp-servers.sh failed, error status 1
    May 30 12:56:42 vro.vcf.lab systemd[1]: run-bootstrap.service: Main process exited, code=exited, status=1/FAILURE
    May 30 12:56:42 vro.vcf.lab systemd[1]: run-bootstrap.service: Failed with result 'exit-code'.
    May 30 12:56:42 vro.vcf.lab systemd[1]: run-bootstrap.service: Unit process 850 (tee) remains running after unit stopped.
    May 30 12:56:42 vro.vcf.lab systemd[1]: Failed to start Run firstboot or everyboot script.

So the firstboot service failed, and the appliance was never set up properly.
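The full journal is long, so it can help to pull out only the firstboot scripts that reported a failure. The following is a minimal sketch, not part of the appliance tooling: the function name `failed_firstboot_scripts` is my own, and it assumes the failure lines keep the `Script <path> failed, error status <n>` wording seen in the log above.

```shell
# Print the firstboot scripts that the journal reports as failed.
# Reads journal text on stdin, so it also works on a saved log file.
# Assumption: failure lines look like
#   ... Script /etc/bootstrap/firstboot.d/<name>.sh failed, error status <n>
failed_firstboot_scripts() {
    sed -nE 's/.*Script ([^ ]+) failed, error status ([0-9]+).*/\1 (exit status \2)/p'
}

# Usage on the appliance (messages from the current boot only):
#   journalctl -b --no-pager | failed_firstboot_scripts
```

Reading from stdin rather than calling journalctl inside the function keeps the filter usable on a log bundle copied off the appliance.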

An old regression

KB82295 describes a similar kube-apiserver problem, so I checked /etc/hosts:

    root@vro [ ~ ]# cat /etc/hosts
    127.0.0.1 localhost
    127.0.0.1 photon-7b4cab7f611127.0.0.1 vra-k8s.local

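Bingo! The file is corrupted: the newline after the photon hostname is missing, so the vra-k8s.local entry is fused onto the previous line. Let's fix it so that each entry is on its own line: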

    127.0.0.1 localhost
    127.0.0.1 photon-7b4cab7f611
    127.0.0.1 vra-k8s.local
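A fused entry like this is easy to overlook, so a quick check before rebooting may be worthwhile. The sketch below flags any non-comment line that contains more than one IPv4-looking address, which is what a missing newline between two entries produces. The function name `find_fused_hosts_entries` is hypothetical, the check ignores IPv6 entries, and it only reports problems; the repair is still done by hand.

```shell
# Report hosts-file lines that contain more than one IPv4-looking address.
# Prints "<line number>: <content>" for each suspicious line, nothing if clean.
# The file path defaults to /etc/hosts; pass another path to check a copy.
find_fused_hosts_entries() {
    hosts_file="${1:-/etc/hosts}"
    awk '
        /^[[:space:]]*#/ { next }    # skip comment lines
        {
            line = $0
            # gsub returns the number of matches; "&" leaves the text unchanged
            n = gsub(/[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+/, "&", line)
            if (n > 1) print NR ": " $0
        }
    ' "$hosts_file"
}

# Usage:
#   find_fused_hosts_entries              # checks /etc/hosts
#   find_fused_hosts_entries /tmp/hosts   # checks a copy
```

Counting address patterns per line, rather than validating each field, keeps the check short while still catching the case where an address is glued to the end of a hostname.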

Reboot and test

Let's restart the server and see if it works now.

Kubelet started.

    root@vro [ ~ ]# systemctl status kubelet
    ● kubelet.service - kubelet: The Kubernetes Node Agent
         Loaded: loaded (/etc/systemd/system/kubelet.service; enabled; preset: enabled)
        Drop-In: /usr/lib/systemd/system/kubelet.service.d
                 └─20-10-k8s-config.conf
         Active: active (running) since Fri 2025-05-30 14:55:17 UTC; 1 hour ago
           Docs: http://kubernetes.io/docs/
       Main PID: 12477 (kubelet)
          Tasks: 18 (limit: 14297)
         Memory: 144.8M
            CPU: 0h 24min 11.136s
         CGroup: /system.slice/kubelet.service
                 ├─ 12477 /usr/bin/kubelet --config=/var/lib/kubelet/config.yaml --kubeconfig=/etc/kubernetes/kubelet.conf --bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.conf
                 ├─ 12481 /bin/bash /opt/scripts/http-watchdog 12477 20 https://vro.vcf.lab:10250/healthz --cacert /etc/kubernetes/pki/ca.crt --cert /etc/kubernetes/pki/apiserver-kubelet-clie>
                 └─182085 sleep 96

Pods are up and running.

    root@vro [ ~ ]# kubectl get po -n prelude
    NAME                                      READY   STATUS    RESTARTS   AGE
    contour-contour-77cbcf7fd5-ggzlt          1/1     Running   0          1h
    contour-envoy-hn6ff                       2/2     Running   0          1h
    ndc-controller-manager-55b44d46b7-vpsqf   1/1     Running   0          1h
    orchestration-ui-app-7cb66d9947-7w2s2     1/1     Running   0          1h
    postgres-0                                1/1     Running   0          1h
    proxy-service-54445dcf68-pv86x            1/1     Running   0          1h
    vco-app-94cf5ffb9-kx9mm                   2/2     Running   0          1h
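To avoid eyeballing the pod list after every reboot, the output can be piped through a small filter that prints only the pods that are not fully ready. This is a rough sketch with a hypothetical function name, `not_ready_pods`. It assumes the default `kubectl get po` column order (NAME, READY, STATUS) and treats anything other than `Running` with n/n containers ready as a problem, so pods in a `Completed` state would be listed too.

```shell
# List pods that are not fully ready, reading `kubectl get po` output on stdin.
# A pod is reported when READY is not n/n or STATUS is not "Running".
not_ready_pods() {
    awk 'NR > 1 {                       # skip the header row
        split($2, ready, "/")           # READY column, e.g. "1/2"
        if (ready[1] != ready[2] || $3 != "Running") print $1
    }'
}

# Usage:
#   kubectl get po -n prelude | not_ready_pods
```

If the filter prints nothing, every pod in the namespace is running with all of its containers ready.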

The /etc/hosts file now also contains the automatically managed service entries:

    root@vro [ ~ ]# cat /etc/hosts
    127.0.0.1 localhost
    127.0.0.1 photon-7b4cab7f611
    127.0.0.1 vra-k8s.local
    ### k8s service hostnames follow. *.svc.cluster.local are automatically updated ###
    10.244.6.164 pgpool.prelude.svc.cluster.local
    10.244.7.59 proxy-service.prelude.svc.cluster.local
    10.244.5.161 vco-service.prelude.svc.cluster.local

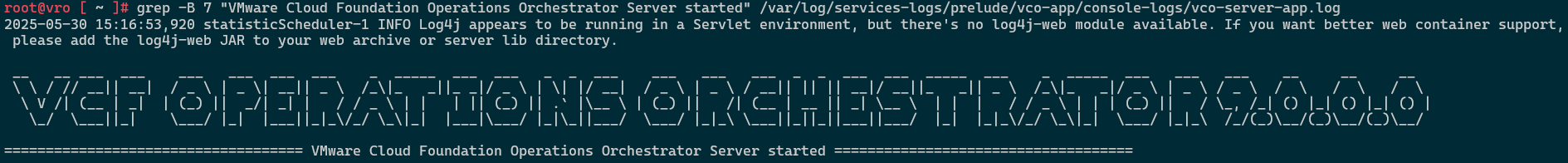

Operations Orchestrator started

HTH